Having a car accident leaves you with a lot of free time.

So I decided to create the ultimate Linux filesystem benchmark: I took a spare hard disk I had available and tested every filesystem my Linux system, running a 2.6.33-gentoo-r2 kernel, can write to.

For the tests I used bonnie++ as root, with the command line `bonnie++ -d /mnt/iozone/test/ -s 2000 -n 500 -m hpfs -u root -f -b -r 999`.
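As a rough sketch, the same command line can be assembled from named parameters, which makes it easier to see what each flag is for. The flag comments paraphrase the bonnie++ manual page; the helper name `bonnie_command` is purely illustrative, and the script only builds the command string, it does not run the benchmark.

```python
import shlex


def bonnie_command(directory, size_mb, num_files, label, user, ram_mb):
    """Build the bonnie++ argument list used for the runs in this benchmark."""
    return [
        "bonnie++",
        "-d", directory,       # directory on the filesystem under test
        "-s", str(size_mb),    # size of the test file for the I/O tests
        "-n", str(num_files),  # file-count parameter for the create/read/delete tests
        "-m", label,           # label shown in the report
        "-u", user,            # user to run as
        "-f",                  # fast mode: skip the per-character I/O tests
        "-b",                  # no write buffering: fsync() after every write
        "-r", str(ram_mb),     # RAM size to assume
    ]


args = bonnie_command("/mnt/iozone/test/", 2000, 500, "hpfs", "root", 999)
print(shlex.join(args))
```

Because of `-f`, the per-character tests are skipped, which is why the Per Char columns in the results stay empty.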

My Linux system supports quite a large number of writable filesystems: AFFS, BTRFS, ext2, ext3, ext4, FAT16, FAT32, HFS, HFS+, HFSX, HPFS, JFS, NTFS, Reiser, UFS, UFS2, XFS and ZFS.

I will divide the filesystems into "foreign unsupported", "foreign supported" and "native" filesystems.

A "foreign unsupported" filesystem is one designed and created outside Linux, for another operating system, that lacks features Linux needs to run flawlessly, such as POSIX permissions, links and so on. These are AFFS, FAT16, FAT32, HFS and HPFS.

A "foreign supported" filesystem is one designed and created outside Linux, for another operating system, that supports all the features Linux needs to run flawlessly, even if the current Linux implementation does not implement them fully. These are HFS+, HFSX, JFS, NTFS, UFS, UFS2, XFS and ZFS.

A "native" filesystem is one designed by the Linux community of developers, with Linux in mind, and usually never found outside Linux systems. These are BTRFS, ext2, ext3, ext4 and Reiser.
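The three groups can be written down as a small lookup table. This is only a sketch of the classification described above; the names `CATEGORIES`, `ALL_FILESYSTEMS` and `category_of` are made up for the example.

```python
# Classification exactly as given in the text above.
CATEGORIES = {
    "foreign unsupported": ["AFFS", "FAT16", "FAT32", "HFS", "HPFS"],
    "foreign supported": ["HFS+", "HFSX", "JFS", "NTFS", "UFS", "UFS2", "XFS", "ZFS"],
    "native": ["BTRFS", "ext2", "ext3", "ext4", "Reiser"],
}

# Every writable filesystem tested in this benchmark.
ALL_FILESYSTEMS = [
    "AFFS", "BTRFS", "ext2", "ext3", "ext4", "FAT16", "FAT32", "HFS", "HFS+",
    "HFSX", "HPFS", "JFS", "NTFS", "Reiser", "UFS", "UFS2", "XFS", "ZFS",
]


def category_of(filesystem):
    """Return the category name a filesystem belongs to."""
    for category, members in CATEGORIES.items():
        if filesystem in members:
            return category
    raise KeyError(filesystem)


# Sanity check: every tested filesystem falls into exactly one group.
classified = [fs for members in CATEGORIES.values() for fs in members]
assert sorted(classified) == sorted(ALL_FILESYSTEMS)

for fs in ALL_FILESYSTEMS:
    print(f"{fs:8} {category_of(fs)}")
```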

AFFS, or Amiga Fast File System, is the old AmigaOS 2.0 filesystem, still in use by the AROS, AmigaOS and MorphOS operating systems. It does not support the features required by Linux and is not an option for disks containing anything but data.

BTRFS, or B-Tree File System, is a filesystem specifically designed to replace the older native Linux filesystems, and it includes online migration of data from the extX filesystems.

ext2, or Extended 2 File System, is an evolution of the original Linux ext filesystem, which was designed to overcome the limits of the Minix filesystem used in the first Linux versions. ext3 is an evolution that added journaling, and ext4 is a further evolution that replaces some structures with newer and faster ones.

FAT, or File Allocation Table, is the original Microsoft filesystem, introduced with Microsoft Disk BASIC in the late 1970s and extensively used for data interchange, mobile phones, photo cameras and so on. FAT16 is an evolution of the original FAT (retroactively called FAT12) to allow for bigger disks, and FAT32 is the ultimate evolution, introduced in Windows 95 OSR2 to overcome all the limitations. However, Microsoft abandoned it in favor of NTFS for hard disks and exFAT (currently not writable by Linux) for data interchange.

HFS, or Hierarchical File System, is the original Apple filesystem introduced with Mac OS 5.0. Its design is heavily influenced by the design of Mac OS itself, requiring support for resource forks, icon and window positions, etc.

HFS+, or Hierarchical File System Plus, is an evolution of HFS that modernizes it with support for UNIX features, longer file names and multiple alternate data streams (not only resource forks). The current Linux implementation of HFS+ does not support all of its features, journaling for example. HFSX is a variant of HFS+ that adds case-sensitive behavior, so that files "foo" and "Foo" are different ones.

HPFS, or High Performance File System, is a filesystem designed by Microsoft for OS/2 to overcome the limitations of the FAT filesystem.

JFS, or Journaled File System, is a filesystem designed by IBM for AIX, a UNIX variant, and so it supports all the features required by Linux. Strictly speaking its name is JFS2, as JFS is an older AIX filesystem that was deprecated before JFS2 was ported to Linux.

NTFS, or New Technology File System, is a filesystem designed by Microsoft for Windows NT to be the perfect server filesystem. It was designed to support all the features needed by all the operating systems it was intended to serve: OS/2, Mac OS, DOS, UNIX. Write support is not available directly in the Linux kernel but in userspace as NTFS-3G, which implements neither all the features of the filesystem nor all those required by Linux.

Reiser filesystem is a filesystem designed by Hans Reiser to overcome the performance problems of the ext filesystems and to add journaling. Its last version, Reiser4, is not supported by the Linux kernel because of reasons outside the scope of this benchmark.

UFS, or UNIX File System, also called BSD Fast File System, is a filesystem introduced by the BSD 4 UNIX variant. UFS2 is a revision introduced by FreeBSD to add journaling and overcome some of the limitations. Support for both filesystems has been "experimental" in Linux for more than a decade.

XFS, or eXtended File System, is a filesystem introduced by Silicon Graphics for IRIX, another UNIX variant, to overcome the limitations of their previous filesystem, EFS. Linux's XFS is slightly different from IRIX's XFS, however both are interchangeable.

ZFS, or Zettabyte File System, is a filesystem introduced by Sun Microsystems for their Solaris UNIX variant. It introduces a lot of new filesystem design concepts and is still being polished. Support on Linux is provided by a userspace driver and not in the kernel itself.

The tests measure the speed to write a 2 GB file, rewrite it, read it, re-read it randomly, create 500 files, read them and delete them.

The AFFS, FAT and HPFS filesystems failed the create/read/delete files test with some I/O error. In their native operating systems they have no problem creating 500 files, so it's a problem in the Linux implementation.

The output from Bonnie++ is:

| | Size | Sequential Output Per Char K/sec | % CPU | Sequential Output Block K/sec | % CPU | Rewrite K/sec | % CPU | Sequential Input Per Char K/sec | % CPU | Sequential Input Block K/sec | % CPU | Random Seeks /sec | % CPU | Num Files | Sequential Create /sec | % CPU | Sequential Read /sec | % CPU | Sequential Delete /sec | % CPU | Random Create /sec | % CPU | Random Read /sec | % CPU | Random Delete /sec | % CPU |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | 2000M | | | 6014 | 2 | 5065 | 3 | | | 14887 | 5 | 115.2 | 9 | | | | | | | | | | | | | |
| | Latency | | | 9545ms | | 10553ms | | | | 16000ms | | 842ms | | Latency | | | | | | | | | | | | |
| | 2000M | | | 26126 | 11 | 14151 | 8 | | | 69588 | 5 | 35.0 | 262 | 500 | 14 | 72 | 55300 | 99 | 13 | 56 | 14 | 72 | 35273 | 99 | 8 | 47 |
| | Latency | | | 431ms | | 626ms | | | | 166ms | | 6071ms | | Latency | 6067ms | | 1712us | | 32642ms | | 6632ms | | 934us | | 7227ms | |
| | 2000M | | | 27592 | 4 | 13381 | 3 | | | 57779 | 3 | 424.1 | 5 | 500 | 84 | 63 | 401150 | 99 | 114 | 0 | 98 | 70 | 745 | 99 | 97 | 28 |
| | Latency | | | 326ms | | 526ms | | | | 23808us | | 279ms | | Latency | 522ms | | 214us | | 31756ms | | 483ms | | 8831us | | 878ms | |
| | 2000M | | | 27190 | 9 | 13522 | 3 | | | 58337 | 4 | 392.0 | 6 | 500 | 177 | 1 | 293176 | 99 | 129 | 0 | 177 | 1 | 392430 | 99 | 154 | 0 |
| | Latency | | | 754ms | | 626ms | | | | 49656us | | 193ms | | Latency | 859ms | | 876us | | 1200ms | | 844ms | | 205us | | 1497ms | |
| | 2000M | | | 28852 | 5 | 13832 | 3 | | | 57969 | 3 | 366.6 | 5 | 500 | 246 | 2 | 281186 | 99 | 155 | 1 | 246 | 2 | 372310 | 99 | 202 | 1 |
| | Latency | | | 4026ms | | 658ms | | | | 97049us | | 183ms | | Latency | 903ms | | 869us | | 1061ms | | 900ms | | 183us | | 951ms | |
| | 2000M | | | 28201 | 6 | 13317 | 4 | | | 55201 | 3 | 354.9 | 6 | | | | | | | | | | | | | |
| | Latency | | | 326ms | | 230ms | | | | 103ms | | 269ms | | Latency | | | | | | | | | | | | |
| | 2000M | | | 26065 | 6 | 13317 | 4 | | | 54575 | 3 | 333.0 | 6 | | | | | | | | | | | | | |
| | Latency | | | 326ms | | 230ms | | | | 103ms | | 269ms | | Latency | | | | | | | | | | | | |
| | 2000M | | | 28761 | 5 | 13272 | 5 | | | 50507 | 11 | 324.8 | 3 | | | | | | | | | | | | | |
| | Latency | | | 426ms | | 526ms | | | | 16808us | | 150ms | | Latency | | | | | | | | | | | | |
| | 2000M | | | 28705 | 3 | 13201 | 2 | | | 57350 | 4 | 472.7 | 2 | 500 | 3864 | 29 | 3513 | 99 | 5342 | 96 | 5338 | 43 | 248171 | 99 | 7754 | 67 |
| | Latency | | | 426ms | | 426ms | | | | 18678us | | 281ms | | Latency | 100ms | | 341ms | | 3064us | | 16396us | | 165us | | 753us | |
| | 2000M | | | 28805 | 3 | 13353 | 2 | | | 57481 | 4 | 482.1 | 2 | 500 | 3899 | 28 | 3547 | 99 | 5451 | 96 | 5431 | 43 | 255570 | 99 | 7859 | 66 |
| | Latency | | | 227ms | | 410ms | | | | 24543us | | 212ms | | Latency | 152ms | | 338ms | | 2542us | | 14442us | | 211us | | 1452us | |
| | 2000M | | | 25198 | 19 | 13488 | 6 | | | 47790 | 11 | 159.1 | 4 | 500 | 6980 | 97 | 216652 | 99 | 15720 | 46 | 7393 | 82 | 329146 | 99 | 16662 | 56 |
| | Latency | | | | | | | | | | | | | Latency | 14546us | | 1853us | | 3224us | | 9178us | | 180us | | 1510us | |
| | 2000M | | | 28486 | 4 | 13855 | 2 | | | 59308 | 3 | 546.6 | 3 | 500 | 7836 | 25 | 379769 | 99 | 2289 | 8 | 218 | 2 | 392966 | 99 | 96 | 0 |
| | Latency | | | 679ms | | 626ms | | | | 34570us | | 136ms | | Latency | 179ms | | 316us | | 747ms | | 2523ms | | 558us | | 67889ms | |
| | 2000M | | | 28324 | 6 | 12480 | 3 | | | 55439 | 5 | 156.5 | 1 | 500 | 5822 | 12 | 11353 | 18 | 8403 | 14 | 6280 | 14 | 13918 | 18 | 1025 | 2 |
| | Latency | | | 52838us | | 127ms | | | | 20516us | | 296ms | | Latency | 130ms | | 144ms | | 444ms | | 108ms | | 25397us | | 427ms | |
| | 2000M | | | 27557 | 11 | 13567 | 4 | | | 58166 | 5 | 462.2 | 9 | 500 | 1704 | 10 | 328480 | 99 | 1785 | 10 | 1498 | 9 | 398475 | 99 | 448 | 3 |
| | Latency | | | 626ms | | 683ms | | | | 14264us | | 164ms | | Latency | 1299ms | | 657us | | 1882ms | | 1269ms | | 207us | | 2843ms | |
| | 2000M | | | 27478 | 11 | 13472 | 4 | | | 58109 | 5 | 459.7 | 9 | 500 | 1458 | 8 | 319832 | 99 | 1404 | 8 | 1284 | 7 | 395686 | 99 | 396 | 3 |
| | Latency | | | 626ms | | 526ms | | | | 13669us | | 202ms | | Latency | 2405ms | | 672us | | 2078ms | | 1424ms | | 205us | | 3293ms | |
| | 2000M | | | 4484 | 4 | 14018 | 4 | | | 57305 | 7 | 417.1 | 8 | 500 | 102 | 86 | 417263 | 99 | 1252 | 6 | 103 | 87 | 414744 | 99 | 176 | 57 |
| | Latency | | | 410ms | | 567ms | | | | 81779us | | 185ms | | Latency | 488ms | | 164us | | 110ms | | 469ms | | 537us | | 497ms | |
| | 2000M | | | 4472 | 4 | 13333 | 3 | | | 56329 | 6 | 431.9 | 7 | 500 | 100 | 87 | 419216 | 99 | 1257 | 6 | 102 | 87 | 418432 | 99 | 161 | 53 |
| | Latency | | | 426ms | | 331ms | | | | 100ms | | 512ms | | Latency | 487ms | | 185us | | 111ms | | 467ms | | 51us | | 537ms | |
| | 2000M | | | 28707 | 4 | 13759 | 3 | | | 55647 | 4 | 408.4 | 5 | 500 | 559 | 5 | 70286 | 98 | 557 | 4 | 637 | 6 | 110524 | 99 | 102 | 0 |
| | Latency | | | 5226ms | | 530ms | | | | 29344us | | 260ms | | Latency | 1555ms | | 14286us | | 1897ms | | 1421ms | | 277us | | 8480ms | |
| | 2000M | | | 19540 | 3 | 12844 | 2 | | | 32664 | 2 | 152.5 | 1 | 500 | 753 | 4 | 37453 | 10 | 142 | 0 | 781 | 4 | 399620 | 99 | 51 | 0 |
| | Latency | | | 2562ms | | 2926ms | | | | 261ms | | 2268ms | | Latency | 2531ms | | 61859us | | 2965ms | | 2800ms | | 203us | | 5209ms | |
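The latency cells in the Bonnie++ output mix microseconds (`us`) and milliseconds (`ms`), so they cannot be compared by eye or sorted as plain numbers. A minimal sketch of normalizing them to a single unit follows; the helper name `latency_us` is an assumption for this example, and it only handles the two units that actually appear in the output above.

```python
import re

# Conversion factors to microseconds for the units seen in the results.
_MICROSECONDS_PER_UNIT = {"us": 1, "ms": 1000}


def latency_us(cell):
    """Convert a latency cell such as '1712us' or '6067ms' to integer microseconds."""
    match = re.fullmatch(r"(\d+)(us|ms)", cell.strip())
    if match is None:
        raise ValueError(f"unrecognised latency value: {cell!r}")
    value, unit = match.groups()
    return int(value) * _MICROSECONDS_PER_UNIT[unit]


# File-test latencies taken from one row of the output above.
cells = ["6067ms", "1712us", "32642ms", "6632ms", "934us", "7227ms"]
for cell in sorted(cells, key=latency_us):
    print(f"{cell:>8} = {latency_us(cell):>10} us")
```

Working in integer microseconds avoids any floating-point rounding when comparing values.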

The table is quite long, so comparison graphs follow:

This is the graph for single-file sequential write speed. UFS and AFFS show an abnormally slow speed, indicating a big need for optimization.

The fastest ones, without a big difference between them, are ext4, HFSX and XFS.

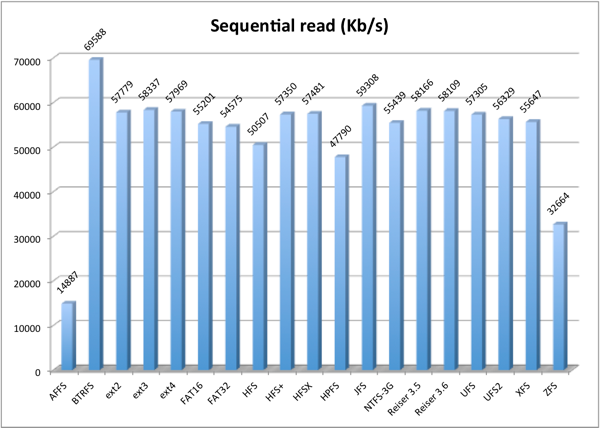

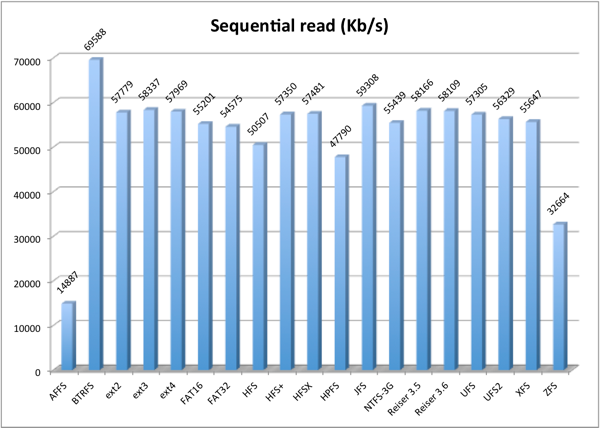

This is the graph for single-file sequential read speed. AFFS needs optimization here as well.

The fastest one by a wide margin is BTRFS, and the second fastest is JFS.

These two graphs show the speed of writing and reading big files. If this is your intended usage, the recommended filesystems are BTRFS, ext4, HFSX, HFS+, XFS and JFS.

However, if you need maximum portability between systems, HFS+ is the choice because it's readable and writable by more operating systems than the others.

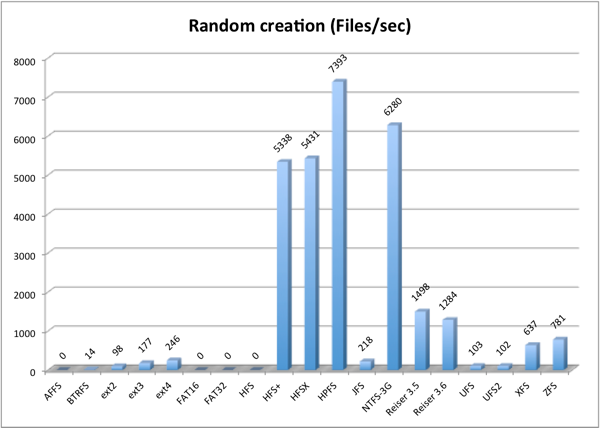

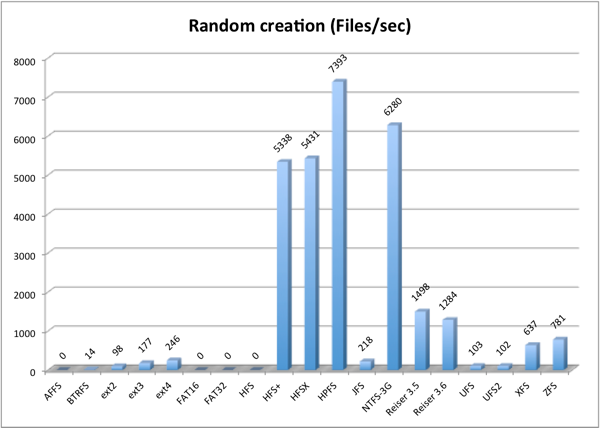

The following graphs show a more normal filesystem usage, where a lot of files are created, read and deleted at the same time.

This test shows surprising results. Reiser takes the lead among the native filesystems, while even the newest one, BTRFS, is quite far behind all the other native ones.

Among the foreign filesystems, HPFS takes the lead. However, because of HPFS design limitations, the choice is NTFS, even though it runs in userspace.

When randomly reading 500 files, the results invert. NTFS loses its lead to UFS2. ext3 shows a huge improvement over ext2 and, conversely, BTRFS shows a huge loss of performance.

Finally, when deleting all the files, HPFS takes the lead again, followed by HFS+ and HFSX.

Unless you want to write files and never delete them, the obvious choice is HFS+ or HFSX.

Surprisingly, even though this is not its native implementation, HFS+ provides the best combination across all tests.

It supports all of the features required by Linux, gives good sequential speed, and maintains its speed when handling a large number of files.

The only bad thing is that Linux does not support its journaling. That is not a problem if you take care to unmount it cleanly, without an "oops! what happened to the electricity" moment. Of course, you can always spam the Linux kernel developers so they implement that feature of the HFS+ filesystem, which has existed since 2003, 8 years ago.